Imbalanced data is a data science term for a dataset in which the classes are represented unequally. Imbalanced datasets come up regularly in machine learning applications. One example is fraud detection, where banks scan large numbers of transactions to detect fraud, yet only a very small fraction of those transactions turn out to be fraudulent.

When standard machine learning algorithms are applied to this type of dataset, the models tend to show poor predictive performance, especially on minority class samples (i.e. the class that has fewer samples). The reason is that most of these algorithms are developed under the assumption that classes are equally represented. Therefore, to learn effectively from such data, we need specific tools and techniques.

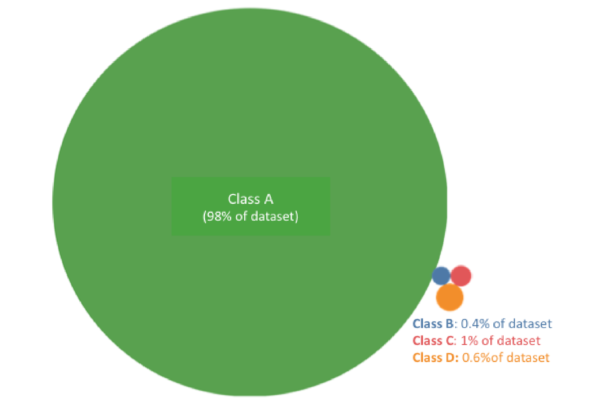

In this blog post, we consider an example from healthcare where the minority classes are not only outnumbered but also extremely rare! Figure 1 shows a bubble diagram of this dataset. Class A is the healthy subject class, and classes B, C and D are patients with different symptoms. Overall, the patients form 2% of the dataset.

There are several techniques and tools that help us learn from an imbalanced dataset and design an algorithm with acceptable classification performance on both the majority and minority classes. The following are the most common ones:

- Introduce more performance metrics:

Classification accuracy alone is simply not enough and is often misleading. A classifier can perform very well at detecting the majority class and poorly at detecting the minority class, i.e. it is biased toward the majority class. However, this poor performance does not show up in the overall accuracy, because the number of minority class samples is much lower than the number of majority class samples. We need more metrics to clear the air and show the true performance, such as:

– Precision, recall and F1-score calculated using confusion matrix,

– Receiver Operating Characteristics (ROC) curve,

– Precision-Recall (PR) curve.
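To make the gap between accuracy and the other metrics concrete, here is a minimal sketch in plain Python. The data is hypothetical (98 healthy subjects, 2 patients, and a classifier that always predicts "healthy"), and the helper name `binary_metrics` is our own, not taken from any library.

```python
from collections import Counter


def binary_metrics(y_true, y_pred, positive):
    """Accuracy, precision, recall and F1 with `positive` treated as the positive class."""
    # Count the four confusion-matrix cells as (is_actually_positive, is_predicted_positive).
    cells = Counter((t == positive, p == positive) for t, p in zip(y_true, y_pred))
    tp = cells[(True, True)]
    fp = cells[(False, True)]
    fn = cells[(True, False)]
    tn = cells[(False, False)]

    accuracy = (tp + tn) / len(y_true)
    # Guard against division by zero when the class is never predicted or never present.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical data: 98 healthy subjects and 2 patients.
y_true = ["healthy"] * 98 + ["patient"] * 2
# A trivial classifier that always predicts the majority class.
y_pred = ["healthy"] * 100

metrics = binary_metrics(y_true, y_pred, positive="patient")
print(metrics)
```

In this toy setup the accuracy is 98%, while precision, recall and F1 for the patient class are all zero, which is exactly the failure that accuracy hides.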

- Resample the data:

Being the most common technique in dealing with imbalanced datasets, resampling methods aim to change the class distribution to help classifiers learn the classes equally. There are three main resampling categories [1] as follows:

– Under-sampling: creating a subset of the original dataset by eliminating data samples (usually from the majority class),

– Over-sampling: creating a superset of the original dataset by adding copies of data samples or generating new samples from existing ones (usually from the minority class),

– Hybrid methods: combining both over- and under-sampling.

Each of these methods has its own pros and cons. For instance, random under-sampling may eliminate useful data, and random over-sampling in extremely imbalanced cases can lead to overfitting. Interestingly, in our case resampling methods couldn’t solve the imbalance problem, because classes B, C, and D were extremely underrepresented! Even severe down-sampling of class A didn’t help.
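As a rough illustration of random under- and over-sampling, the sketch below uses only the Python standard library. The function names, the toy data, and the target sizes are all assumptions made for this example; dedicated libraries offer more refined variants.

```python
import random
from collections import Counter


def random_undersample(samples, labels, majority, keep, seed=0):
    """Keep only `keep` randomly chosen samples of the majority class."""
    rng = random.Random(seed)
    majority_idx = [i for i, y in enumerate(labels) if y == majority]
    kept = set(rng.sample(majority_idx, keep))
    idx = [i for i, y in enumerate(labels) if y != majority or i in kept]
    return [samples[i] for i in idx], [labels[i] for i in idx]


def random_oversample(samples, labels, minority, target, seed=0):
    """Duplicate randomly chosen minority samples until the class has `target` members."""
    rng = random.Random(seed)
    minority_idx = [i for i, y in enumerate(labels) if y == minority]
    extra = [rng.choice(minority_idx) for _ in range(target - len(minority_idx))]
    idx = list(range(len(labels))) + extra
    return [samples[i] for i in idx], [labels[i] for i in idx]


# Hypothetical toy data: 98 samples of class A, 2 of class B.
samples = list(range(100))
labels = ["A"] * 98 + ["B"] * 2

_, under_labels = random_undersample(samples, labels, majority="A", keep=10)
over_samples, over_labels = random_oversample(samples, labels, minority="B", target=10)

print(Counter(under_labels))  # class A shrinks to 10 samples, 88 are discarded
print(Counter(over_labels))   # class B grows to 10 samples

# Over-sampling only repeats the same few points, hence the overfitting risk.
distinct_b = len({s for s, y in zip(over_samples, over_labels) if y == "B"})
print(distinct_b)
```

Note that the over-sampled class B still contains only two distinct samples, and the under-sampled class A has lost most of its data, which mirrors the drawbacks described above.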

- Use different models:

Don’t insist on one classification algorithm. It is always useful to get a quick assessment of a bunch of different algorithms and learn what to keep and what to discard. For example, it is often believed that decision trees outperform other algorithms on imbalanced datasets. However, this is only true if the minority class samples all lie in one area of the feature space (then they will all be partitioned into one node!) [2].

- Try cost-sensitive learning:

If you are stuck with a dataset for which resampling methods don’t offer much, try cost-sensitive learning. Here, a higher cost is imposed on mistakes made on the minority class, to direct the classifier’s attention to this class. By influencing the training phase in this way, we try to improve the generalization of the algorithm. In our case, using cost-sensitive algorithms gave a noticeable boost to our algorithm’s performance.
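One common way to set such costs is to weight each class inversely to its frequency. The sketch below is an illustration only, not the exact scheme we used: the weighting formula `n_samples / (n_classes * class_count)` is one widely used heuristic, and the function names and toy data are hypothetical.

```python
import math
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


def weighted_log_loss(y_true, p_positive, weights, positive):
    """Weighted average of the log loss; p_positive[i] is the predicted P(positive)."""
    total, weight_sum = 0.0, 0.0
    for y, p in zip(y_true, p_positive):
        w = weights[y]
        # Probability the model assigned to the true class of this sample.
        p_correct = p if y == positive else 1.0 - p
        total += -w * math.log(p_correct)
        weight_sum += w
    return total / weight_sum


# Hypothetical data: 98 healthy subjects and 2 patients.
y_true = ["healthy"] * 98 + ["patient"] * 2
# A lazy model that gives every sample a 2% chance of being a patient.
p_patient = [0.02] * 100

weights = balanced_class_weights(y_true)
uniform = {"healthy": 1.0, "patient": 1.0}

plain_loss = weighted_log_loss(y_true, p_patient, uniform, positive="patient")
costly_loss = weighted_log_loss(y_true, p_patient, weights, positive="patient")
print(weights)
print(plain_loss, costly_loss)
```

With these weights both classes contribute equally to the total loss, so the lazy model that ignores patients is penalized far more heavily than under the unweighted loss.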

- Try anomaly detection:

Anomaly detection is the task of identifying outliers or abnormal samples in a dataset. It is one of many applications of one-class classification (OCC). OCC is designed for scenarios where we have access to samples from (mainly) one class [1]. A one-class classifier is trained using the samples from the majority class as the target, so the samples of the minority class are treated as outliers. This interesting approach is especially useful in binary classification and in scenarios where the minority class lacks any structure.
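The idea of training on the majority class only can be sketched with a deliberately simple detector: compute the centroid of the majority class and flag anything farther away than most of the training samples. This is a toy stand-in for a real one-class classifier; the function names, the 95% quantile, and the synthetic Gaussian data are all assumptions for illustration.

```python
import math
import random


def fit_one_class(train, quantile=0.95):
    """Learn a centroid and a distance threshold from majority-class samples only."""
    dims = len(train[0])
    center = tuple(sum(x[d] for x in train) / len(train) for d in range(dims))
    dists = sorted(math.dist(x, center) for x in train)
    # Distance below which roughly `quantile` of the training samples fall.
    threshold = dists[min(len(dists) - 1, int(quantile * len(dists)))]
    return center, threshold


def is_outlier(x, center, threshold):
    """Flag a sample whose distance to the centroid exceeds the learned threshold."""
    return math.dist(x, center) > threshold


# Hypothetical majority class: 500 two-dimensional samples around the origin.
rng = random.Random(0)
healthy = [(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) for _ in range(500)]

center, threshold = fit_one_class(healthy)

# A hypothetical minority-class sample far from the majority cluster.
patient = (8.0, 8.0)
print(is_outlier(patient, center, threshold))

# Share of majority samples wrongly flagged, controlled by `quantile`.
false_alarm_rate = sum(is_outlier(x, center, threshold) for x in healthy) / len(healthy)
print(false_alarm_rate)
```

No minority sample is needed during fitting, which is why this family of methods remains usable when the minority classes are too rare to learn from directly.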

- Gather more data (if possible)!

It might sound strange at first, but sometimes it is possible to acquire more data for the minority class. For example, in our case we simply got more data for the minority classes by changing the data selection filters. However, this is not always possible, especially if the imbalance is intrinsic (i.e. due to the nature of the dataset).

In general, there is no rule of thumb that tells you which method will boost the performance; it depends on the type of imbalance and on the complexity of the data. It is better to first understand the data by taking small and simple steps, such as applying basic classification methods, adjusting the parameters, and carefully watching how the performance changes. After this step you will usually have an idea about your data. Then, apply resampling methods. In our experience, a combination of different methods usually works best. Try different techniques and see which works. Good luck!

References

[1] A. Fernández, S. García, M. Galar, R. C. Prati, B. Krawczyk, and F. Herrera, Learning from Imbalanced Data Sets. 2018.

[2] F. T. Liu, K. M. Ting, and Z. H. Zhou, “Isolation forest,” in Proceedings – IEEE International Conference on Data Mining, ICDM, 2008.